Researchers have found that AI (particularly large language models, or LLMs) could change the nature of their work.

The study was published in the journal Science.

Grossmann and colleagues noted that large language models trained on vast amounts of text data are becoming increasingly capable of simulating human-like responses and behaviour. This opens up new avenues for testing theories and hypotheses about human behaviour at unprecedented scale and speed.

“What we wanted to explore in this article is how social science research practices can be adapted, even reinvented, to harness the power of AI,” said Igor Grossmann, professor of psychology at the University of Waterloo.

Traditionally, the social sciences rely on a range of methods, including questionnaires, behavioural tests, observational studies, and experiments. A common goal in social science research is to obtain a generalized representation of the characteristics of individuals, groups, cultures, and their dynamics. With the advent of advanced AI systems, the landscape of data collection in the social sciences may shift.

“AI models can represent a vast array of human experiences and perspectives, possibly giving them a higher degree of freedom to generate diverse responses than conventional human participant methods, which can help to reduce generalizability concerns in research,” said Grossmann.

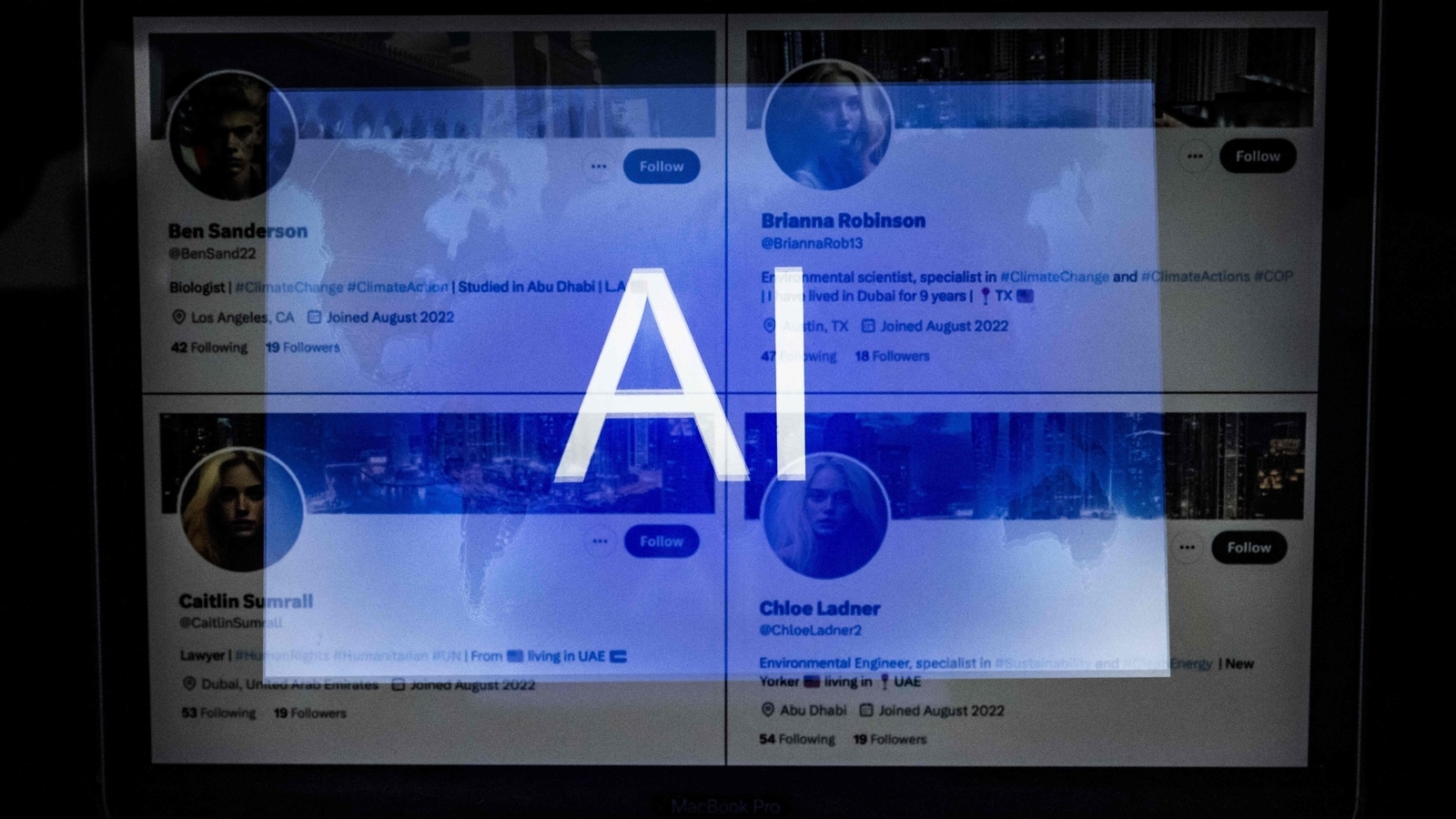

“LLMs might supplant human participants for data collection,” said University of Pennsylvania psychology professor Philip Tetlock, adding, “In fact, LLMs have already demonstrated their ability to generate realistic survey responses concerning consumer behaviour. Large language models will revolutionize human-based forecasting in the next 3 years. It won’t make sense for humans unassisted by AIs to venture probabilistic judgments in serious policy debates. I put a 90 per cent chance on that. Of course, how humans react to all of that is another matter.”

While opinions on the feasibility of this application of advanced AI systems vary, studies using simulated participants could be used to generate novel hypotheses that could then be confirmed in human populations.

But the researchers warn of some of the possible pitfalls in this approach, including the fact that LLMs are often trained to exclude sociocultural biases that exist in real-life humans. This means that sociologists using AI in this way could not study those biases.

Professor Dawn Parker, a co-author of the article from the University of Waterloo, notes that researchers will need to establish guidelines for the governance of LLMs in research.

“Pragmatic concerns with data quality, fairness, and equity of access to the powerful AI systems will be substantial,” Parker said, adding, “So, we must ensure that LLMs, like all scientific models, are open-source, meaning that their algorithms and ideally data are available to all to scrutinize, test, and modify. Only by maintaining transparency and replicability can we ensure that AI-assisted social science research truly contributes to our understanding of human experience.”