NEW DELHI: Meta has announced that it will start hiding more types of age-inappropriate content from teens on Instagram and Facebook, in line with expert guidance.

The company said it is automatically placing teens into the most restrictive content control setting on both platforms.

“We already apply this setting for new teens when they join Instagram and Facebook and are now expanding it to teens who are already using these apps,” Meta said in a blogpost on Tuesday.

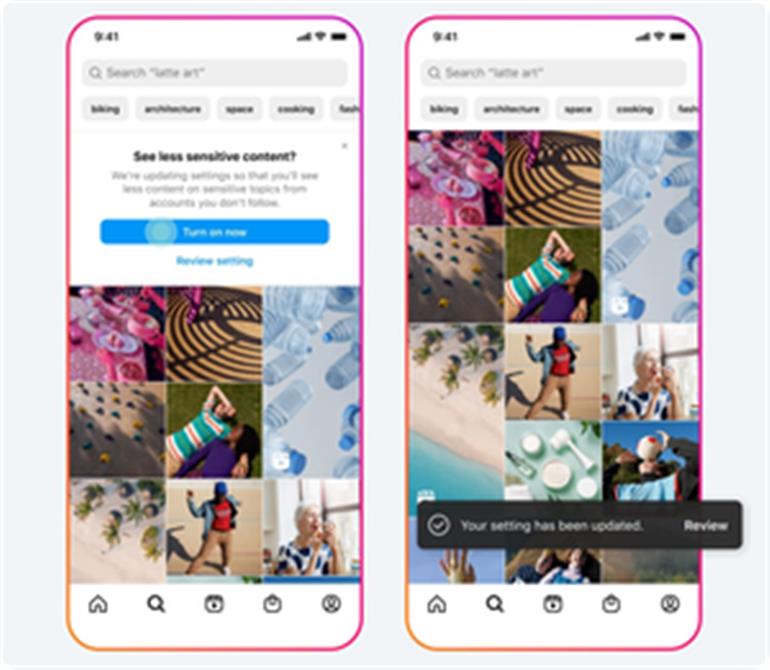

“Our content recommendation controls, known as ‘Sensitive Content Control’ on Instagram and ‘Reduce’ on Facebook, make it more difficult for people to come across potentially sensitive content or accounts in places like Search and Explore,” it added.

Moreover, the tech giant stated that it will start hiding results related to suicide, self-harm and eating disorders when people search for these terms and will direct them to expert resources for help.

“We already hide results for suicide and self-harm search terms that inherently break our rules and we’re extending this protection to include more terms,” Meta said.

This update will roll out to everyone over the coming weeks.

To help make sure teens are regularly checking their safety and privacy settings on Instagram, and are aware of the more private settings available, Meta said it is sending new notifications encouraging them to update their settings to a more private experience with a single tap.

If teenagers select to “Activate beneficial settings”, the corporate will routinely change their settings to limit who can repost their content material, tag or point out them, or embody their content material in Reels Remixes, the corporate defined.

The tech giant will also ensure that only their followers can message them and will help hide offensive comments.