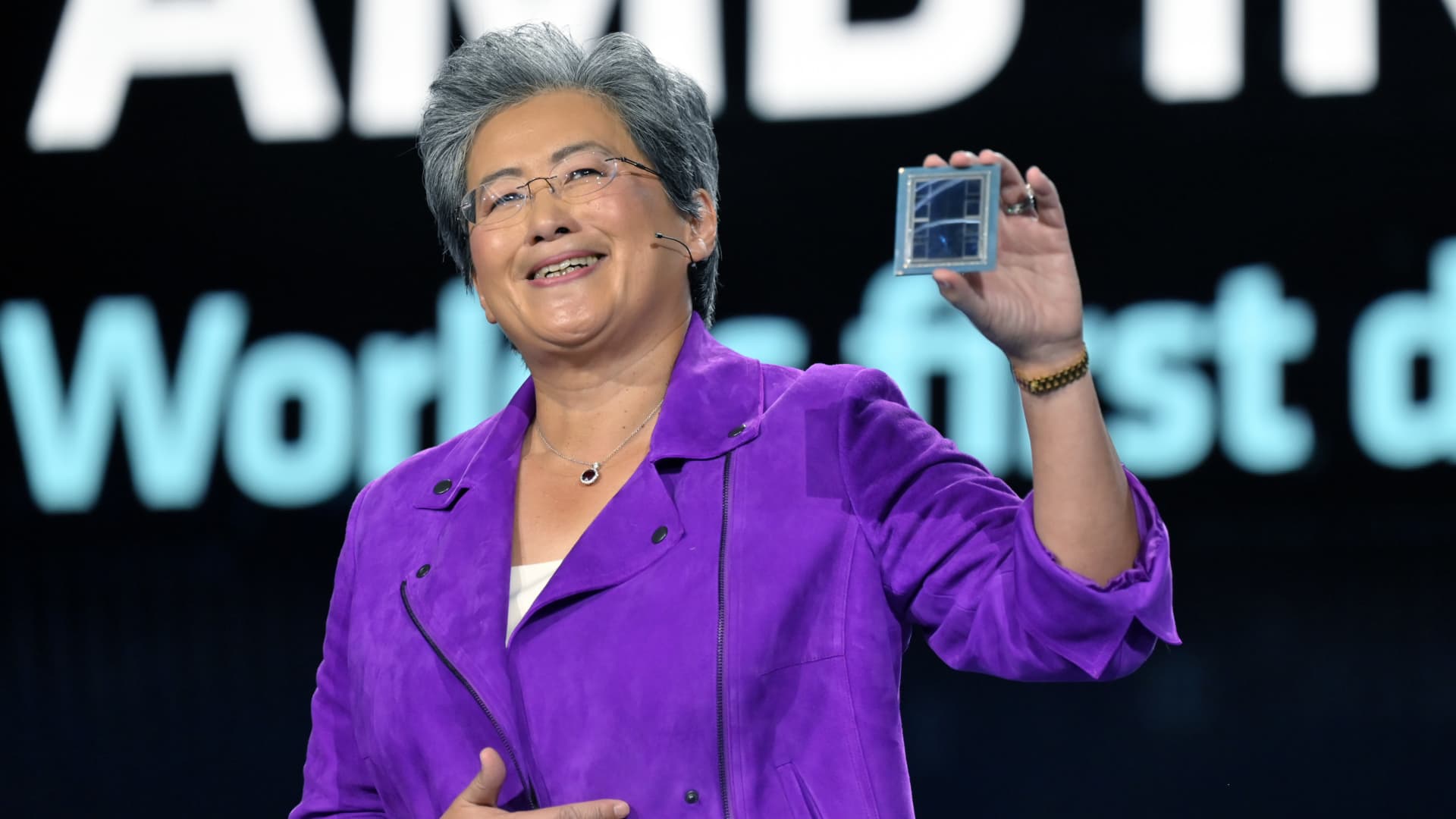

Lisa Su displays an AMD Instinct MI300 chip as she delivers a keynote address at CES 2023 at The Venetian Las Vegas on January 04, 2023 in Las Vegas, Nevada.

David Becker | Getty Images

Meta, OpenAI, and Microsoft said at an AMD investor event on Wednesday that they will use AMD’s newest AI chip, the Instinct MI300X. It is the biggest sign so far that technology companies are searching for alternatives to the expensive Nvidia graphics processors that have been essential for creating and deploying artificial intelligence programs like OpenAI’s ChatGPT.

If AMD’s latest high-end chip is good enough for the technology companies and cloud service providers building and serving AI models when it starts shipping early next year, it could lower costs for developing AI models and put competitive pressure on Nvidia’s surging AI chip sales growth.

“All of the interest is in big iron and big GPUs for the cloud,” AMD CEO Lisa Su said on Wednesday.

AMD says the MI300X is based on a new architecture, which often leads to significant performance gains. Its most distinctive feature is that it has 192GB of a cutting-edge, high-performance type of memory known as HBM3, which transfers data faster and can fit larger AI models.

At an event for analysts on Wednesday, Su directly compared the Instinct MI300X and the systems built with it to Nvidia’s main AI GPU, the H100.

“What this performance does is it just directly translates into a better user experience,” Su said. “When you ask a model something, you’d like it to come back faster, especially as responses get more complicated.”

The main question facing AMD is whether companies that have been building on Nvidia will invest the time and money to add another GPU supplier. “It takes work to adopt AMD,” Su said.

AMD on Wednesday told investors and partners that it had improved its software suite, called ROCm, to compete with Nvidia’s industry-standard CUDA software, addressing a key shortcoming that had been one of the primary reasons why AI developers currently prefer Nvidia.

Price will also be important. AMD didn’t reveal pricing for the MI300X on Wednesday, but Nvidia’s chips can cost around $40,000 each, and Su told reporters that AMD’s chip would have to cost less to purchase and operate than Nvidia’s in order to persuade customers to buy it.

Who says they’ll use the MI300X?

AMD MI300X accelerator for artificial intelligence.

On Wednesday, AMD said it had already signed up some of the companies most hungry for GPUs to use the chip. Meta and Microsoft were the two largest purchasers of Nvidia H100 GPUs in 2023, according to a recent report from research firm Omdia.

Meta said that it will use Instinct MI300X GPUs for AI inference workloads like processing AI stickers, image editing, and operating its assistant. Microsoft’s CTO Kevin Scott said it would offer access to MI300X chips through its Azure web service. Oracle’s cloud will also use the chips.

OpenAI said it would support AMD GPUs in one of its software products called Triton, which isn’t a large language model like GPT, but is used in AI research to access chip features.

AMD isn’t yet forecasting massive sales for the chip, only projecting about $2 billion in total data center GPU revenue in 2024. Nvidia reported over $14 billion in data center sales in the most recent quarter alone, although that metric includes other chips besides GPUs.

However, AMD says that the total market for AI GPUs could climb to $400 billion over the next four years, doubling the company’s previous projection. The figure shows how high expectations are and how coveted high-end AI chips have become, and why the company is now focusing investor attention on the product line. Su also suggested to reporters that AMD doesn’t think it needs to beat Nvidia to do well in the market.

“I think it’s clear to say that Nvidia has to be the vast majority of that right now,” Su told reporters, referring to the AI chip market. “We believe it could be $400-billion-plus in 2027. And we could get a nice piece of that.”