Mark Zuckerberg, CEO of Meta, attends a U.S. Senate bipartisan Artificial Intelligence Insight Forum at the U.S. Capitol in Washington, D.C., Sept. 13, 2023.

Stefani Reynolds | AFP | Getty Images

In its latest quarterly report on adversarial threats, Meta said on Thursday that China is a growing source of covert influence and disinformation campaigns, which could get supercharged by advances in generative artificial intelligence.

Only Russia and Iran rank above China when it comes to coordinated inauthentic behavior (CIB) campaigns, which typically involve the use of fake user accounts and other methods intended to “manipulate public debate for a strategic purpose,” Meta said in the report.

Meta said it disrupted three CIB networks in the third quarter, two stemming from China and one from Russia. One of the Chinese CIB networks was a large operation that required Meta to remove 4,780 Facebook accounts.

“The individuals behind this activity used basic fake accounts with profile pictures and names copied from elsewhere on the internet to post and befriend people from around the world,” Meta said regarding China’s network. “Only a small portion of such friends were based in the United States. They posed as Americans to post the same content across different platforms.”

Disinformation on Facebook emerged as a major problem ahead of the 2016 U.S. elections, when foreign actors, most notably from Russia, were able to inflame sentiments on the site, primarily with the intention of boosting the candidacy of then-candidate Donald Trump. Since then, the company has been under greater scrutiny to monitor disinformation threats and campaigns and to provide greater transparency to the public.

Meta removed a previous China-related disinformation campaign, as detailed in August. The company said it took down over 7,700 Facebook accounts related to that Chinese CIB network, which it described at the time as the “largest known cross-platform covert influence operation in the world.”

If China becomes a political talking point as part of the upcoming election cycles around the world, Meta said “it’s likely that we’ll see China-based influence operations pivot to attempt to influence these debates.”

“In addition, the more domestic debates in Europe and North America focus on support for Ukraine, the more likely that we should expect to see Russian attempts to interfere in those debates,” the company added.

One trend Meta has noticed regarding CIB campaigns is the increasing use of a variety of online platforms like Medium, Reddit and Quora, as opposed to the bad actors “centralizing their activity and coordination in one place.”

Meta said that development appears to be related to “larger platforms keeping up the pressure on threat actors,” resulting in troublemakers swiftly turning to smaller sites “in the hope of facing less scrutiny.”

The company said the rise of generative AI creates additional challenges when it comes to the spread of disinformation, but Meta said it hasn’t “seen evidence of this technology being used by known covert influence operations to make hack-and-leak claims.”

Meta has been investing heavily in AI, and one of its uses is to help identify content, including computer-generated media, that could violate company policies. Meta said nearly 100 independent fact-checking partners will help review any questionable AI-generated content.

“While the use of AI by known threat actors we've seen so far has been limited and not very effective, we want to remain vigilant and prepare to respond as their tactics evolve,” the report said.

Still, Meta warned that the upcoming elections will likely mean that “the defender community across our society needs to prepare for a larger volume of synthetic content.”

“This means that just as potentially violating content may scale, defenses must scale as well, in addition to continuing to enforce against adversarial behaviors that may or may not involve posting AI-generated content,” the company said.

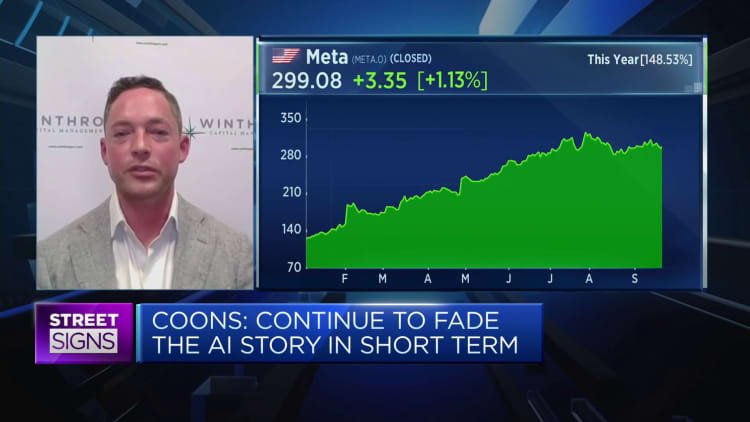

WATCH: Meta is an organization with an “identification disaster.”