Nvidia is on a tear, and it does not appear to have an expiration date.

Nvidia makes the graphics processors, or GPUs, that are needed to build AI applications like ChatGPT. In particular, there’s high demand among tech companies right now for its highest-end AI chip, the H100.

Nvidia’s overall sales grew 101% on an annual basis to $13.51 billion in its second fiscal quarter, which ended July 30, the company announced Wednesday; sales in its data center business grew 171%. Not only is it selling a lot of AI chips, but they’re more profitable, too: The company’s gross margin expanded more than 25 percentage points versus the same quarter last year to 71.2%, which is incredible for a physical product.

Plus, Nvidia said it sees demand remaining high through next year and that it has secured increased supply, which will let it increase the number of chips it has on hand to sell in the coming months.

The company’s stock rose more than 6% after hours on the news, adding to its remarkable gain of more than 200% so far this year.

It’s clear from Wednesday’s report that Nvidia is profiting more from the AI boom than any other company.

Nvidia reported an incredible $6.7 billion in net income in the quarter, a 422% increase over the same period last year.
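A 422% increase means this quarter’s figure is 5.22 times the year-ago figure, not 4.22 times. The short Python sketch below backs out the implied year-ago net income from the two numbers reported here. It assumes the $6.7 billion figure is rounded, so the result is only approximate; the function name is illustrative.

```python
def implied_prior_value(current: float, pct_increase: float) -> float:
    """Back out the year-ago value from the current value and its percentage increase."""
    # An increase of p percent means current = prior * (1 + p / 100)
    return current / (1 + pct_increase / 100)


# Figures from the report: about $6.7 billion in net income, up 422% year over year
prior_net_income = implied_prior_value(6.7, 422)
print(f"Implied year-ago net income: ${prior_net_income:.2f} billion")
# prints: Implied year-ago net income: $1.28 billion
```

In other words, net income went from roughly $1.3 billion to roughly $6.7 billion in a year.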

“I think I was high on the Street for next year coming into this report but my numbers have to go way up,” wrote Chaim Siegel, an analyst at Elazar Advisors, in a note after the report. He lifted his price target to $1,600, a “3x move from here,” and said, “I still think my numbers are too conservative.”

He said that price implies a multiple of 13 times 2024 earnings per share.
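Siegel’s figures can be combined to see what they imply. Dividing the $1,600 target by the 13x multiple gives the 2024 earnings per share his estimate would require, and dividing the target by three gives the rough share price that a “3x move” starts from. This is a back-of-the-envelope sketch using only the numbers quoted above, not a reconstruction of Siegel’s actual model; treating “3x” as an exact factor is an assumption.

```python
price_target = 1600.0  # Siegel's price target, in dollars per share
pe_multiple = 13.0     # stated multiple of 2024 earnings per share
upside_factor = 3.0    # "a 3x move from here", assumed here to be exactly 3

# Price = multiple * EPS, so the implied EPS is price / multiple
implied_eps_2024 = price_target / pe_multiple

# If the target is 3x the current price, the current price is about one third of it
implied_current_price = price_target / upside_factor

print(f"Implied 2024 EPS: ${implied_eps_2024:.2f}")
print(f"Implied share price when the note was written: ${implied_current_price:.2f}")
```

The output, about $123 of 2024 earnings per share against a starting price of roughly $533, shows how much profit growth the target assumes.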

Nvidia’s prodigious cash flow contrasts with the position of its top customers, which are spending heavily on AI hardware and building multimillion-dollar AI models but haven’t yet started to see income from the technology.

About half of Nvidia’s data center revenue comes from cloud providers, followed by big internet companies. The growth in Nvidia’s data center business came from “compute,” or AI chips, which grew 195% during the quarter, more than the data center business’s overall growth of 171%.

Microsoft, which has been a huge buyer of Nvidia’s H100 GPUs, both for its Azure cloud and for its partnership with OpenAI, has been increasing its capital expenditures to build out its AI servers and doesn’t expect a positive “revenue signal” until next year.

On the consumer internet front, Meta said it expects to spend as much as $30 billion this year on data centers, and possibly more next year, as it works on AI. Nvidia said on Wednesday that Meta was seeing returns in the form of increased engagement.

Some startups have even gone into debt to buy Nvidia GPUs in hopes of renting them out at a profit in the coming months.

On an earnings call with analysts, Nvidia officials offered some perspective on why its data center chips are so profitable.

Nvidia stated its software program contributes to its margin and that it’s promoting extra difficult merchandise than mere silicon. Nvidia’s AI software program, known as Cuda, is cited by analysts as the first purpose why clients cannot simply change to opponents like AMD.

“Our Data Center products include a significant amount of software and complexity which is also helping for gross margins,” Nvidia finance chief Colette Kress said on the call.

Nvidia is also packaging its technology into expensive and complicated systems like its HGX box, which combines eight H100 GPUs into a single computer. Nvidia boasted on Wednesday that building one of these boxes draws on a supply chain of 35,000 parts. HGX boxes can cost around $299,999, according to reports, versus a volume price of between $25,000 and $30,000 for a single H100, according to a recent Raymond James estimate.
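Those two estimates make it possible to rough out how much of an HGX box’s reported price goes beyond the eight GPUs inside it. The Python sketch below multiplies eight H100s by each end of the Raymond James range and compares the result with the reported box price. It assumes the per-chip estimate applies to the GPUs inside the box and ignores discounts, so treat the output as a rough range rather than Nvidia’s actual pricing; the names are illustrative.

```python
GPUS_PER_HGX = 8
HGX_PRICE = 299_999                   # reported price of one HGX box, in dollars
H100_PRICE_RANGE = (25_000, 30_000)   # Raymond James volume-price estimate per H100


def hgx_premium(chip_price: int) -> tuple[int, float]:
    """Return the dollar amount and percentage by which the box price exceeds eight bare GPUs."""
    gpu_cost = GPUS_PER_HGX * chip_price
    premium = HGX_PRICE - gpu_cost
    return premium, 100 * premium / gpu_cost


for chip_price in H100_PRICE_RANGE:
    premium, pct = hgx_premium(chip_price)
    print(f"At ${chip_price:,} per H100: eight GPUs cost ${GPUS_PER_HGX * chip_price:,}, "
          f"box premium ${premium:,} ({pct:.0f}%)")
```

Under these assumptions, the complete system sells for roughly $60,000 to $100,000 (about 25% to 50%) more than its eight GPUs alone, which is consistent with Nvidia’s point that system complexity helps its margins.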

Nvidia said that as it ships its coveted H100 GPU to cloud service providers, they are often opting for the more complete system.

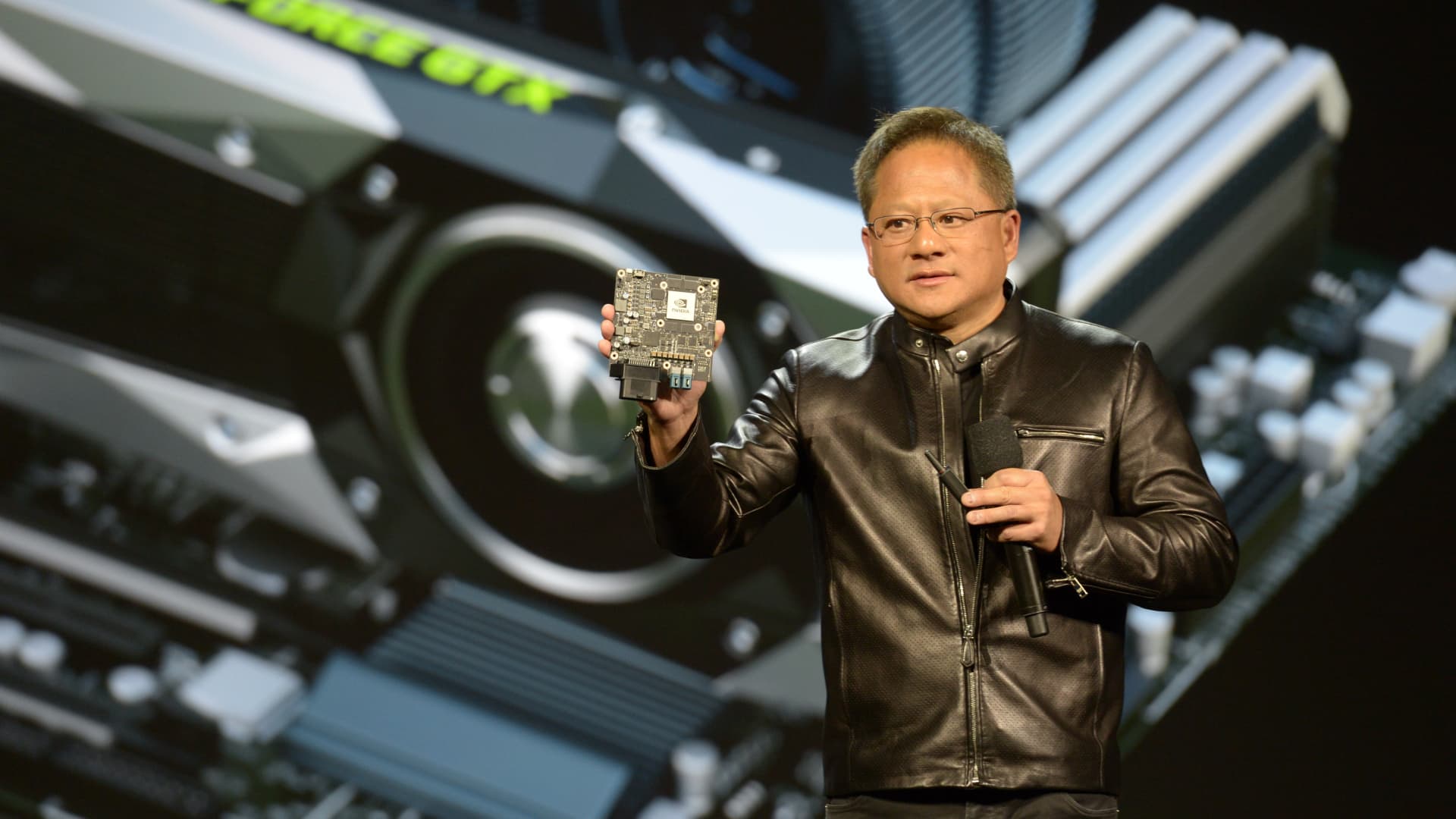

“We call it H100, as if it’s a chip that comes off of a fab, but H100s go out, really, as HGX to the world’s hyperscalers and they’re really quite large system components,” Nvidia CEO Jensen Huang said on the call.