Jensen Huang, president of Nvidia, holding the Grace Hopper superchip CPU used for generative AI at the Supermicro keynote presentation during Computex 2023.

Walid Berrazeg | Lightrocket | Getty Images

Nvidia on Monday unveiled the H200, a graphics processing unit designed for training and deploying the kinds of artificial intelligence models that are powering the generative AI boom.

The new GPU is an upgrade from the H100, the chip OpenAI used to train its most advanced large language model, GPT-4. Big companies, startups and government agencies are all vying for a limited supply of the chips.

H100 chips cost between $25,000 and $40,000, according to an estimate from Raymond James, and thousands of them working together are needed to create the biggest models in a process called “training.”

Excitement over Nvidia’s AI GPUs has supercharged the company’s stock, which is up more than 230% so far in 2023. Nvidia expects around $16 billion of revenue for its fiscal third quarter, up 170% from a year ago.

The key improvement with the H200 is that it includes 141GB of next-generation “HBM3” memory that will help the chip perform “inference,” or using a large model after it’s trained to generate text, images or predictions.

Nvidia said the H200 will generate output nearly twice as fast as the H100. That is based on a test using Meta’s Llama 2 LLM.

The H200, which is expected to ship in the second quarter of 2024, will compete with AMD’s MI300X GPU. AMD’s chip, similar to the H200, has additional memory over its predecessors, which helps fit big models on the hardware to run inference.
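As a rough illustration of why memory capacity matters for inference, the sketch below estimates how much memory a model's weights alone would occupy. It rests on assumptions not stated in the article: weights stored at 16 bits (2 bytes) per parameter, the published Llama 2 sizes of 7, 13 and 70 billion parameters, and an 80GB H100 variant for comparison. Real deployments also need memory for activations and caches, so actual requirements would be higher.

```python
# Back-of-the-envelope estimate of memory needed for model weights only.
# Assumptions (not from the article): 2 bytes per parameter (16-bit weights),
# an 80GB H100 variant, and no allowance for activations or caches.

H100_GB = 80    # assumed H100 memory capacity
H200_GB = 141   # H200 memory capacity, as reported in the article


def weights_gb(params_billion: float, bytes_per_param: int = 2) -> float:
    """Return approximate weight memory in decimal gigabytes."""
    total_bytes = params_billion * 1e9 * bytes_per_param
    return total_bytes / 1e9


def fits(params_billion: float, capacity_gb: float) -> bool:
    """True if the weights alone fit within a single GPU's memory."""
    return weights_gb(params_billion) <= capacity_gb


if __name__ == "__main__":
    for size in (7, 13, 70):  # Llama 2 parameter counts, in billions
        need = weights_gb(size)
        print(
            f"Llama 2 {size}B: ~{need:.0f} GB of weights | "
            f"fits on H100: {fits(size, H100_GB)} | "
            f"fits on H200: {fits(size, H200_GB)}"
        )
```

Under these assumptions, the weights of the largest Llama 2 model would exceed a single 80GB chip but come in just under 141GB, which is the kind of difference extra memory makes when running inference.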

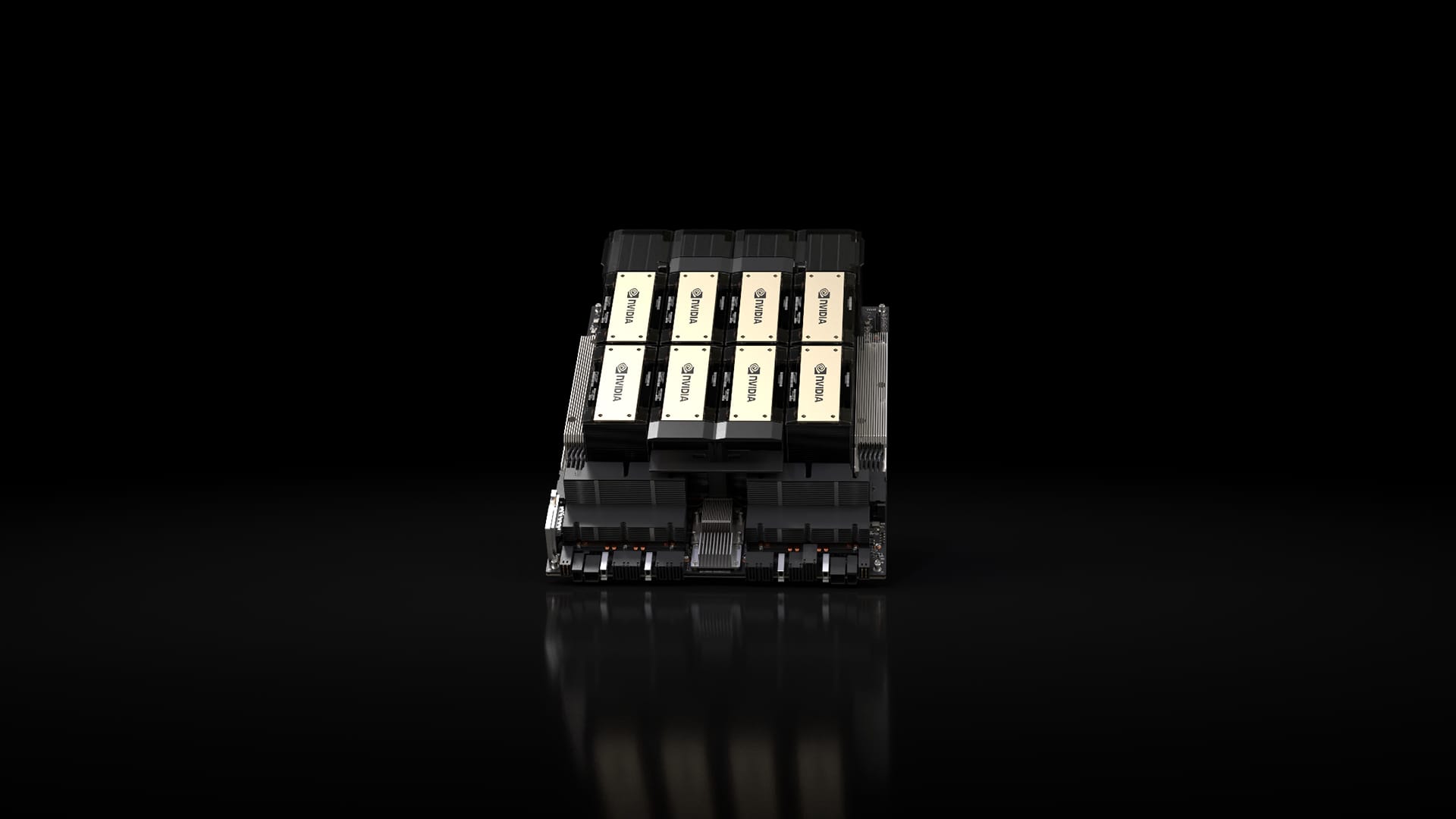

Nvidia H200 chips in an eight-GPU Nvidia HGX system.

Nvidia

Nvidia said the H200 will be compatible with the H100, meaning that AI companies that are already training with the prior model won’t need to change their server systems or software to use the new version.

Nvidia says it will be available in four-GPU or eight-GPU server configurations on the company’s HGX complete systems, as well as in a chip called GH200, which pairs the H200 GPU with an Arm-based processor.

However, the H200 may not hold the crown of the fastest Nvidia AI chip for long.

While companies like Nvidia offer many different configurations of their chips, new semiconductors often take a big step forward about every two years, when manufacturers move to a different architecture that unlocks more significant performance gains than adding memory or other smaller optimizations. Both the H100 and H200 are based on Nvidia’s Hopper architecture.

In October, Nvidia told investors that it would move from a two-year architecture cadence to a one-year release pattern due to high demand for its GPUs. The company displayed a slide suggesting it will announce and release its B100 chip, based on the forthcoming Blackwell architecture, in 2024.

